Meta To Demo Retinal Varifocal & Light Field Passthrough

Meta will demo two new research prototype headsets at SIGGRAPH 2023 next week.

SIGGRAPH is a yearly conference where researchers present breakthroughs in computer graphics hardware & software. Last year Meta showed off Starburst, an HDR display research prototype with 20,000 nits of brightness, the highest of any known headset.

This year Meta will show two prototype headsets: Butterscotch Varifocal will showcase near-retinal resolution and varifocal optics, while Flamera proves out a novel reprojection-free approach to real world passthrough using light fields.

UploadVR’s Ian Hamilton and I went eyes-in with Starburst last year, and I’m travelling to SIGGRAPH 2023 next week to try out the new prototypes this year too.

To be clear, both Butterscotch Varifocal and Flamera are research prototypes intended for investigating the feeling of far-future headset technology. Neither is intended to become an actual product, and Meta specifically warns that the approach each takes “may never make their way into a consumer-facing product”.

Butterscotch Varifocal: Retinal Resolution With Variable Focus

Angular resolution is the true measure of resolution for head-mounted displays because it accounts for differences in field of view between headsets. It describes how many pixels you actually see in each degree of your vision, measured in pixels per degree (PPD). For example, if two headsets use the same display but one has a field of view twice as wide, both would have the same pixel resolution, yet the wider one would have just half the PPD.
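
The two-headset comparison above can be checked with a few lines of Python. This is a minimal sketch that assumes PPD is simply the horizontal pixel count divided by the horizontal field of view. Real lenses spread pixels unevenly across the view, so quoted PPD figures are typically given for the center. The function name and the 2,000-pixel, 100° and 200° figures are illustrative assumptions, not the specifications of any real headset.

```python
def average_ppd(horizontal_pixels: float, horizontal_fov_deg: float) -> float:
    """Average pixels per degree, assuming pixels are spread evenly across the FOV.

    This is a simplification: real headset lenses distort the image, so the
    PPD at the center of the view usually differs from this average.
    """
    if horizontal_fov_deg <= 0:
        raise ValueError("field of view must be positive")
    return horizontal_pixels / horizontal_fov_deg


# Hypothetical example: the same 2,000-pixel-wide display behind two lenses.
narrow = average_ppd(2000, 100)  # 20.0 PPD
wide = average_ppd(2000, 200)    # 10.0 PPD, half the PPD at twice the FOV

print(narrow, wide)
```

Under the same simplification, reaching the 60 PPD threshold across a 100° field of view would take roughly 6,000 horizontal pixels per eye.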

‘Retinal’ is a term used to describe angular resolution exceeding what the human eye can discern. The generally accepted threshold is 60 PPD.

No consumer VR headset today comes close to this – Quest Pro reaches around 22 PPD, while Bigscreen Beyond is claimed at 32 PPD and the $1990 Varjo Aero reaches 35. Varjo’s $5500 business headsets do actually reach retinal resolution, but only in a tiny rectangle in the center of the view.

Last year, Meta showed off a research prototype called Butterscotch that achieves 55 PPD. Its stated purpose was to demonstrate and research the feeling of retinal resolution, and it has a field of view only around half as wide as Quest 2.

Butterscotch Varifocal is the next evolution of Butterscotch, combining its retinal angular resolution with the varifocal optics of the Half-Dome prototype.

Half-Dome was first shown in 2018. It incorporated eye tracking to rapidly mechanically move the displays forward and back to dynamically adjust focus.

All current headsets on the market have fixed-focus lenses. Each eye gets a separate perspective, but the image is focused at a fixed focal distance, usually a few meters away. Your eyes will point (converge or diverge) toward the virtual object you’re looking at, but can’t actually focus (accommodate) to the virtual distance to the object.

This is called the vergence-accommodation conflict. It causes eye strain and can make virtual objects look blurry close up. Solving it is important to make VR feel more real and be more visually comfortable for long-duration use.

Butterscotch Varifocal is capable of supporting accommodation from 25 cm to infinity, Meta says, so you can focus on objects up close or in the far distance.
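
To put the 25 cm figure in context, optical focus demand is commonly expressed in diopters, the reciprocal of the distance in meters. The sketch below uses that standard relation to estimate the mismatch between where the eyes converge and where a fixed-focus lens forces them to focus. The function names and the 2 m fixed focal distance are assumptions for illustration (the text above only says “a few meters”), and this is not a description of how Meta’s prototype works internally.

```python
import math


def focus_demand_diopters(distance_m: float) -> float:
    """Focus demand in diopters (1 / distance in meters); infinity maps to 0."""
    if distance_m <= 0:
        raise ValueError("distance must be positive")
    return 0.0 if math.isinf(distance_m) else 1.0 / distance_m


def vergence_accommodation_mismatch(virtual_m: float, focal_m: float) -> float:
    """Absolute gap, in diopters, between where the eyes converge (virtual_m)
    and where the optics place the focal plane (focal_m)."""
    return abs(focus_demand_diopters(virtual_m) - focus_demand_diopters(focal_m))


# Assumed fixed focal distance for a conventional headset (hypothetical value).
ASSUMED_FIXED_FOCUS_M = 2.0

print(focus_demand_diopters(0.25))      # 4.0 diopters at 25 cm
print(focus_demand_diopters(math.inf))  # 0.0 diopters at infinity
print(vergence_accommodation_mismatch(0.25, ASSUMED_FIXED_FOCUS_M))  # 3.5
```

Under these assumptions, a 25 cm to infinity range corresponds to 4 to 0 diopters, and an object held at 25 cm in a headset fixed at 2 m leaves a 3.5 diopter gap that a varifocal system would aim to close.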

Meta claims the combination of retinal resolution and varifocal optics means the headset offers “crisp, clear visuals that rival what you can see with the naked eye”.

Flamera: Reprojection-Free Light Field Passthrough

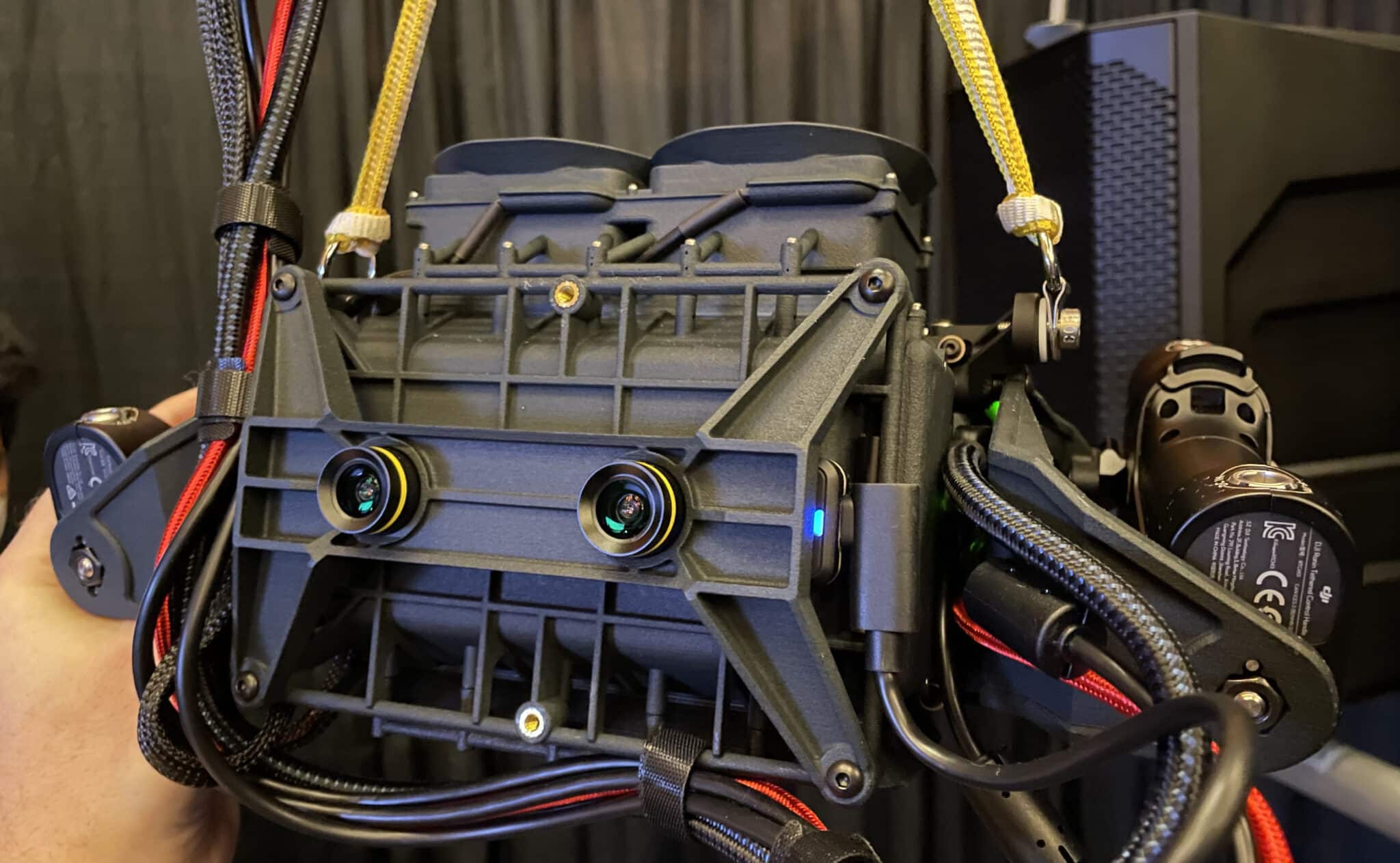

Flamera is a prototype of reprojection-free passthrough. Meta describes it as a “computational camera that uses light field technology”.

Headsets like Quest Pro, Apple Vision Pro, and the upcoming Quest 3 use cameras on the front to show you the real world. But since those cameras are in a different position to your real eyes, they have to use image processing algorithms to reproject the camera views to show what your eyes would be seeing.

This is an imperfect process that adds latency and results in image processing artefacts – even though it is getting better with newer hardware.

Flamera aims to bypass the reprojection approach entirely with a from-scratch hardware design built from the ground up with passthrough in mind. Here’s how Meta describes how it works:

Unlike a traditional light field camera featuring a lens array, Flamera (think “flat camera”) strategically places an aperture behind each lens in the array. Those apertures physically block unwanted rays of light so only the desired rays reach the eyes (whereas a traditional light field camera would capture more than just those light rays, resulting in unacceptably low image resolution). The architecture used also concentrates the finite sensor pixels on the relevant parts of the light field, resulting in much higher resolution images.

The raw sensor data ends up looking like small circles of light that each contain just a part of the desired view of the physical world outside the headset. Flamera rearranges the pixels, estimating a coarse depth map to enable a depth-dependent reconstruction.

This, Meta claims, results in passthrough with much lower latency and far fewer artifacts.
